A new AI system called the Semantic Decoder can translate a person’s brain activity into a continuous stream of text while that person listens to a story or imagines telling one.

The system was developed by researchers at the University of Texas at Austin, who say it may help people who are mentally aware but physically unable to speak, such as some stroke survivors, to communicate intelligibly again.

The scientists’ work was published in the journal Nature Neuroscience and relies in part on a transformer model similar to those that power OpenAI’s ChatGPT and Google’s Bard.

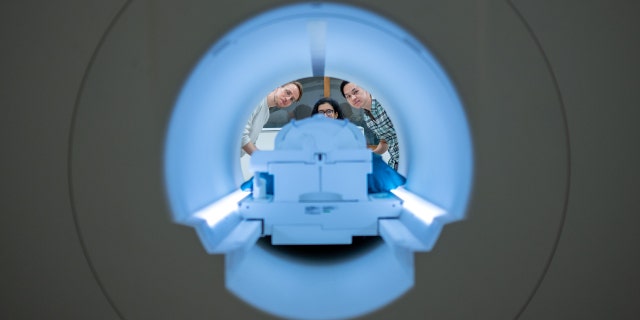

Brain activity is measured with an fMRI scanner after extensive decoder training, during which the individual listens to hours of podcasts while inside the scanner.

Ph.D. student Jerry Tang prepares to collect brain activity data at the Biomedical Imaging Center at the University of Texas at Austin. The researchers trained their semantic decoder on dozens of hours of brain activity data from participants, collected in an fMRI scanner. (Nolan Zink/University of Texas at Austin)

Later, provided a participant is willing to have their thoughts decoded, they listen to a new story or imagine telling one, and the machine generates corresponding text from their brain activity alone.

While the result is not a word-for-word transcript, it captures the gist of what is being said or thought.

Once the decoder has been trained on a participant’s brain activity, about half the time it produces text that closely, and sometimes precisely, matches the intended meaning of the original words.

In one example, a participant listened to a speaker say that they did not have their driver’s license yet. The participant’s thoughts were translated as “You haven’t even started learning to drive yet.”

The researchers said the model can decode continuous language, including complex ideas, over extended periods of time.

Alex Huth (left), Shelly Jin (center), and Jerry Tang (right) prepare to collect brain activity data at the Biomedical Imaging Center at the University of Texas at Austin. The researchers trained their semantic decoder on dozens of hours of brain activity data from participants, collected in an fMRI scanner. (Nolan Zink/University of Texas at Austin)

In addition to having participants listen to or think about stories, the researchers asked them to watch four short, silent video clips while inside the scanner, and the semantic decoder was able to use their brain activity to accurately describe certain events from the videos.

Notably, when the researchers tested the system on people it had not been trained on, the results were unintelligible.

Alex Huth (left) discusses the semantic decoder project with Jerry Tang (center) and Shelly Jin (right) at the Biomedical Imaging Center at the University of Texas at Austin. (Nolan Zink/University of Texas at Austin)

The system is currently impractical for use outside the laboratory because it depends on time spent in an fMRI scanner. However, the researchers believe the approach could transfer to more portable brain-imaging systems.

“We take very seriously the concerns that it could be used for bad purposes and have worked to avoid that,” said study leader Jerry Tang, a doctoral student in computer science, in a statement. “We want to make sure people only use these types of technologies when they want to, and that it helps them.”

The authors said the system could not be used on someone without their knowledge, and that there were ways a person could resist having their thoughts decoded, for example by thinking about animals.

“I think right now, while the technology is in such an early state, it’s important to be proactive by enacting policies that protect people and their privacy,” Tang said. “Regulating what these devices can be used for is also very important.”
